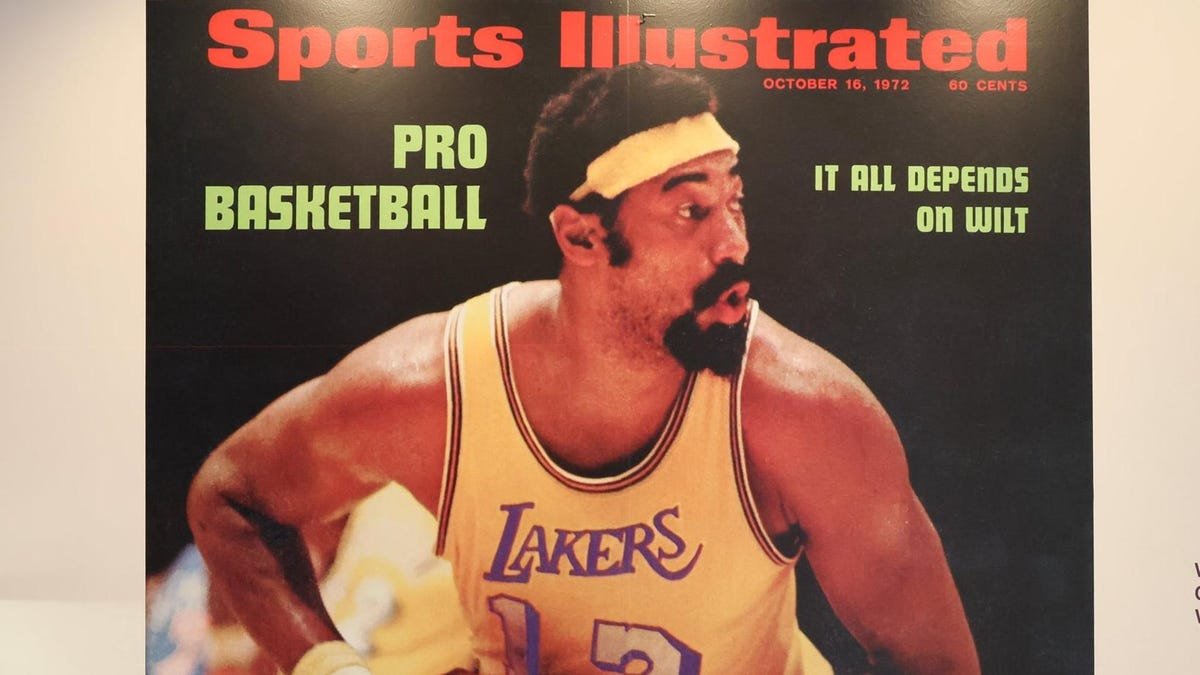

The rapid integration of artificial intelligence (AI) into many aspects of society has made 2023 a surreal year for journalism. As everyone fumbles around in the dark, trying to navigate AI-generated content, the debate over its use has only just begun. One media outlet, Sports Illustrated (SI), took a controversial approach to AI-generated content that damaged its reputation and undermined the trust of its readers.

SI, known for its long-form journalism and long-standing reputation in the industry, attempted to sidestep that debate by using AI to generate content. However, it went far beyond the norms of current media standards. The Arena Group, SI’s current management, created fictional writers with fabricated bios and bylines, complete with AI-generated headshots. The bios read like generic descriptions of happy-go-lucky people, offering a glimpse of how an AI imagines humans.

The Arena Group rotated these fictional writers and their made-up bios to avoid detection. It also extended the practice to other outlets such as TheStreet, where it not only used fictional writers but also published bad personal finance advice. The entire operation recalled the meme of Steve Buscemi posing as a high school student and asking, “How do you do, fellow kids?”, except that here the line might as well have been “How do you do, fellow humans?”

The use of AI-generated content itself may not be unethical, but it becomes problematic when done behind a veil of deception. Readers rely on bylines to determine the credibility of the content they consume. When media organizations mislead their readers about the origins of their content, trust is eroded. It becomes difficult for readers to discern what is genuine and what is artificially generated.

The Arena Group’s attempt to delete the AI-generated content and cover up its actions only deepened the mistrust. In an industry already facing uncertainty and external attacks, such dishonest practices further damage the reputation of journalism.

Fortunately, human reporting revealed the truth and forced The Arena Group to end its partnership with Advon Commerce, the third-party provider of the branded content. However, this incident raises concerns about the potential future misuse of AI in journalism. The idea of AI-generated SI Swimsuit Issue cover models may seem far-fetched, but in this rapidly evolving landscape, it is essential to remain vigilant and uphold ethical standards.

As AI continues to advance and integrate into society, media organizations must approach its use with transparency and integrity. Bylines should accurately represent the authors of the content, and readers should be able to trust the Fourth Estate to provide accurate and reliable information. The industry must navigate this uncharted terrain carefully, ensuring that AI-generated content does not undermine the foundations of journalism or breed further mistrust among readers.